Last week, I tried to “vibe code” something for the first time.

I’ve been using LLMs (usually Claude Code) to help with work for a little while now, but in a very limited (and closely monitored) capacity – asking it to give me the idiomatic version of some bad TypeScript I’ve written, or having it write unit test scaffolding, requests like that. I never accepted anything it wrote without reading it and understanding it first, to make sure it was more-or-less the same as what I would’ve written myself.

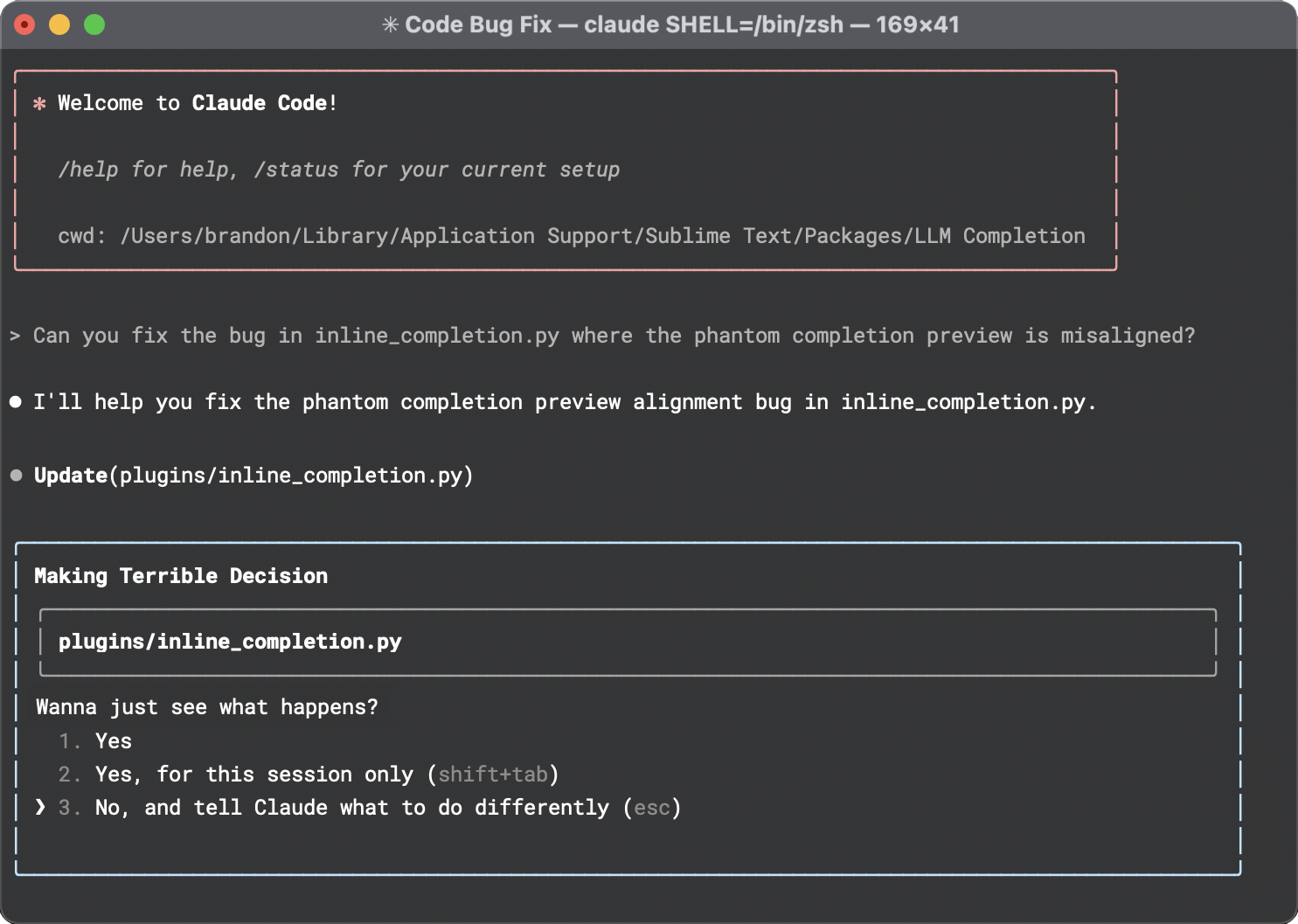

However, more and more I’ve been seeing folks use it in a totally different way: just giving Claude a broad high-level problem or feature request, pointing it at the codebase, turning “auto-accept edits” on, and then leaving it for an hour to go get dinner.

Crazy! This can’t possibly turn out good results… right?

Well, at some point I figured it was probably best I find out for myself, and so the next time I found a problem that was a good fit for it (i.e. a self-contained project, in a language/platform I’ve never used before, which isn’t mission critical infra, etc) – I gave it a try.

Here is the result: https://github.com/pickledish/llm-completion/

The Upshots

To get right to the chase:

- It did work! I now have a Sublime Text plugin that can use an OpenAI-compatible endpoint to generate code completions, just like I wanted. Any time I thought of an improvement, like “we should have a toggle to turn suggestions on/off” or “the plugin shouldn’t suggest anything if the line’s already ended with a semicolon”, all I had to do was ask, and Claude (…usually) figured it out first try, and then asked me to verify. All of this, and I didn’t touch a single line of code in that repository. It still seems pretty magical to me.

However, hidden under that top-line victory are some significant asterisks:

- …but it took a long time. While Claude was able to stand up a technically-functional v1 in just a few minutes, the total amount of time I spent working in Claude Code was several hours, for just a few hundred lines of code. It felt like the old “the last 20% of the work will take 80% of the time”, which is frustrating when you’re the person tapping their foot waiting for it all to just work already.

- …and it was kind of expensive? Those couple of hours weren’t cheap! Claude’s billing is a little opaque (what’s a “cached prompt”?) and Claude Code’s doubly so (it chooses to use a cheaper model… sometimes… for some things?), but it does let you know how much you spent at the end of each session. I wasn’t keeping notes, but I think my total across a few hour-long sessions came out to around $30, which is cheaper than a dev would’ve cost to do it… but this wasn’t so magical an experience that I wouldn’t prefer to have just done it myself and spent that $30 at my local pizza place.

- …and it reached dead-ends often. LLMs have a hard time knowing when to stop digging; my experience in this regard can be summed up by “if Claude doesn’t solve the problem in its first attempt (or maybe two), it’s never going to”. I spent about an hour watching it fumble between `LAYOUT_INLINE` and `LAYOUT_BLOCK` trying to get the phantom preview to render in the right place – it would try one, I would test it, tell Claude “it didn’t work” with a screenshot, it would respond “you’re absolutely right”, then try the other one, pretty much indefinitely.

- …and it REQUIRED examples. In the end, the only way I was able to get Claude out of that CSS-rendering failure loop was to point it (again) at the source for LSP-copilot, a Sublime Text plugin which handles the same problem correctly. After seeing a working example, it was able to get the issue sorted out on its first try. I don’t know if we ever would’ve gotten there without the example.

And the most important caveat:

- I still had to understand the code. There were a number of times Claude tried to take an objectively stupid shortcut to get around a problem, like when it wanted to determine that the text stream from the LLM was done by waiting for 1 second to pass with no more data (instead of fixing its incorrect wrapping of the HTTP response body) – which would have added an arbitrary 1-second delay to all completions. I don’t want to think about the confusing mess that would’ve resulted if I didn’t know how to program, and wasn’t at least glancing at the solutions it was trying to implement as it went.
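For the curious, the non-hacky way to detect the end of a stream looks roughly like the sketch below. This is not the code from the repository, just a minimal Python illustration under a couple of assumptions: that the endpoint streams OpenAI-style server-sent events, where each `data:` line carries a JSON chunk with the text under `choices[0].text`, and that the stream ends with a `data: [DONE]` line. The function name `read_completion` is made up for the example.

```python
import io
import json


def read_completion(body):
    """Collect streamed text from an SSE response body (hypothetical helper).

    `body` is any binary file-like object, such as the response returned by
    urllib.request.urlopen. Assumes OpenAI-style completion chunks.
    """
    pieces = []
    for raw in body:  # yields one line at a time and stops at end-of-file
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # blank separator lines, comments, keep-alives
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # the server explicitly marks the end of the stream
        chunk = json.loads(payload)
        pieces.append(chunk["choices"][0].get("text") or "")
    return "".join(pieces)


# A fake response body standing in for a real HTTP response.
fake_body = io.BytesIO(
    b'data: {"choices": [{"text": "foo("}]}\n\n'
    b'data: {"choices": [{"text": "bar)"}]}\n\n'
    b"data: [DONE]\n\n"
)
print(read_completion(fake_body))  # prints foo(bar)
```

The point is that the loop ends when the server says it is done (or the body hits end-of-file), so no timer is involved and nothing gets delayed.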

Some Tips

During this process, I did figure out a few ways to get the LLM to behave more usefully and predictably, which I’ll just drop in a little bulleted list here – in case it can save anyone else a few minutes (or in Claude Code’s case, a few dollars):

- Give it several examples upfront that cover what you want it to accomplish

- Tell it to write unit tests for the desired behavior before it writes the implementation

- Make sure it includes an absurd number of debug logs, and feed them back to the LLM

- Restarting a session (i.e. removing context) can help snap it out of tunnel vision

- Throw big parts away and start over if it repeatedly fails to solve a problem
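A rough sketch of what the tests-first tip can look like, using the two behaviors mentioned earlier (the on/off toggle and the semicolon rule). The `should_suggest` helper and its signature are hypothetical, not taken from the plugin; the idea is just that the tests pin down the behavior before the LLM gets to write the implementation.

```python
import unittest


def should_suggest(line, enabled=True):
    """Hypothetical helper: decide whether to request a completion for `line`."""
    if not enabled:
        return False  # the user has toggled suggestions off
    # Ignore trailing whitespace so "foo();  " still counts as ended.
    return not line.rstrip().endswith(";")


class ShouldSuggestTest(unittest.TestCase):
    def test_suggests_on_unfinished_line(self):
        self.assertTrue(should_suggest("const x = "))

    def test_skips_line_ending_in_semicolon(self):
        self.assertFalse(should_suggest("const x = 1;"))

    def test_skips_semicolon_followed_by_whitespace(self):
        self.assertFalse(should_suggest("const x = 1;   "))

    def test_skips_everything_when_toggled_off(self):
        self.assertFalse(should_suggest("const x = ", enabled=False))
```

Saved to a file, this can be run with `python -m unittest <filename>`. In practice you would hand the LLM only the test class first, and let it fill in the function until the tests pass.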

The Future

Now that I have one Claude-Coded project under my belt, will I do any more vibe coding?

For now, my answer is “probably not”.

I did learn a ton from doing this. It’s one thing to hear other people talk about outsourcing half of their job to Claude, but another to find out firsthand what it’s capable of; even a year ago, an LLM executing a small project like this would have been unthinkable to me, and yet here we are.

And more importantly, it’s clear to me now that the ability to use one of these agentic coding tools effectively requires a distinct skill set from that of regular software development. There’s certainly a good deal of overlap – some may argue that you must already be a good-enough developer before you should even start – but these tools have a learning curve like any other: just because it speaks English doesn’t mean we can treat it like a peer.

But, why no more vibe coding?

Besides it being kinda slow, and kinda expensive… ultimately it just wasn’t any fun. I got into software development because, you know, I like developing software. I have a blog that’s (uh, mostly) about software! I like knowing exactly how things work, and how that knowledge lets me fix things when they break. Bossing Claude around for this project took that away and put me more in the shoes of a product manager – and if I wanted to be a PM, I would be!

I still have been getting a lot of value from Claude Code just using it as I mentioned at the top of this post – code snippets, questions about the codebase, quick-and-dirty code reviews. For these use cases, Claude Code’s value is instant, uncomplicated, and cheap (my average daily cost is under $1).

And either way, I’m both excited about and a little wary of what’ll develop in the coming months, as the tools get better and Sonnet-level LLMs get cheaper – my job has already changed a good deal, and this is just the beginning!